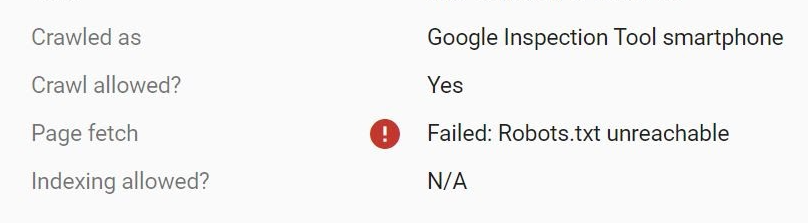

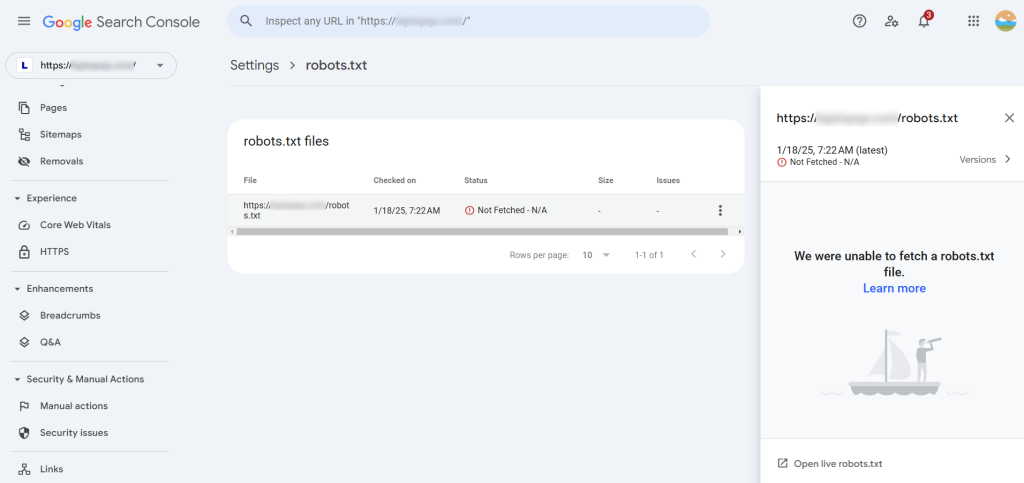

Here’s how I solved the “Failed: Robots.txt unreachable” and “Robots.txt not fetched” errors in Google Search Console. The issue is serious because it prevents Googlebot from crawling and indexing your website and its pages.

The solution I found is to unblock Googlebot’s IP addresses in your web hosting server’s firewall. Yes, although Googlebot isn’t a “bad bot”, it can still end up on a blocklist.

So, contact your web hosting provider and ask them to check whether Googlebot’s IPs are blocked by the firewall and, if they are, to unblock them. Your web host may also have a user interface where you can check and unblock the IPs yourself.

Googlebot uses multiple IP addresses. Many of them start with 66.249… Google publishes the full list of Googlebot IP ranges.
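If your host lets you export the firewall’s blocked IPs, you can compare them against the Googlebot ranges yourself. Here is a minimal Python sketch of that check. The function name `find_blocked_googlebot` is made up for illustration, and the single range in `SAMPLE_GOOGLEBOT_RANGES` is only an assumed sample that matches the 66.249 prefix, so replace it with the ranges from Google’s published list.

```python
import ipaddress

# Illustrative sample only (an assumption): replace with the ranges
# from Google's published Googlebot list before trusting the result.
SAMPLE_GOOGLEBOT_RANGES = ("66.249.64.0/19",)


def find_blocked_googlebot(blocked_entries, googlebot_ranges=SAMPLE_GOOGLEBOT_RANGES):
    """Return the blocked entries (single IPs or CIDR ranges) that overlap a Googlebot range."""
    bot_networks = [ipaddress.ip_network(r) for r in googlebot_ranges]
    hits = []
    for entry in blocked_entries:
        # strict=False also accepts entries like "66.249.66.1/24" with host bits set.
        blocked = ipaddress.ip_network(entry.strip(), strict=False)
        for net in bot_networks:
            # Only compare networks of the same IP version (IPv4 vs IPv6).
            if blocked.version == net.version and blocked.overlaps(net):
                hits.append(entry)
                break
    return hits


if __name__ == "__main__":
    # Hypothetical firewall export: two unrelated entries, two that hit the sample range.
    blocked = ["203.0.113.7", "66.249.66.1", "66.249.0.0/16", "2001:db8::1"]
    for entry in find_blocked_googlebot(blocked):
        print(f"Blocked entry overlaps a Googlebot range: {entry}")
```

Any entry it reports is worth raising with your hosting provider. It only tells you that a blocked address falls inside the ranges you supplied, so the result is as good as the list you feed it.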

Of course, the fix only works if nothing else is preventing access to robots.txt and the website itself, such as DNS issues, wrong file permissions, and the like.
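To rule out those other causes first, a quick check along these lines can help. This is a rough sketch using only the Python standard library; `check_robots` is a hypothetical helper name, and `https://example.com` stands in for your own site. Keep in mind that it runs from your own machine, so it cannot detect a firewall rule that blocks only Googlebot’s IPs.

```python
import socket
import urllib.error
import urllib.request
from urllib.parse import urlsplit


def check_robots(site_url, timeout=10):
    """Return a one-line diagnosis of whether robots.txt is reachable from this machine."""
    host = urlsplit(site_url).hostname
    if not host:
        return f"Could not read a hostname from {site_url!r} (include https://)"

    # Step 1: DNS. If the name does not resolve, nothing else can work.
    try:
        socket.getaddrinfo(host, None)
    except socket.gaierror as exc:
        return f"DNS lookup failed for {host}: {exc}"

    # Step 2: HTTP. Fetch robots.txt and report the status code.
    robots_url = site_url.rstrip("/") + "/robots.txt"
    try:
        with urllib.request.urlopen(robots_url, timeout=timeout) as resp:
            return f"{robots_url} returned HTTP {resp.status}"
    except urllib.error.HTTPError as exc:
        # The server answered, but with an error such as 403 or 500.
        return f"{robots_url} returned HTTP {exc.code}"
    except urllib.error.URLError as exc:
        # No usable answer: connection refused, timeout, TLS problem, etc.
        return f"{robots_url} could not be reached: {exc.reason}"


if __name__ == "__main__":
    print(check_robots("https://example.com"))
```

A 403 here often points to permissions or a security rule on the server, while a DNS failure means the problem sits before the firewall altogether. If this check passes and Search Console still reports the error, a blocked Googlebot IP becomes the more likely cause.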
